Advanced AI: Our next odyssey?

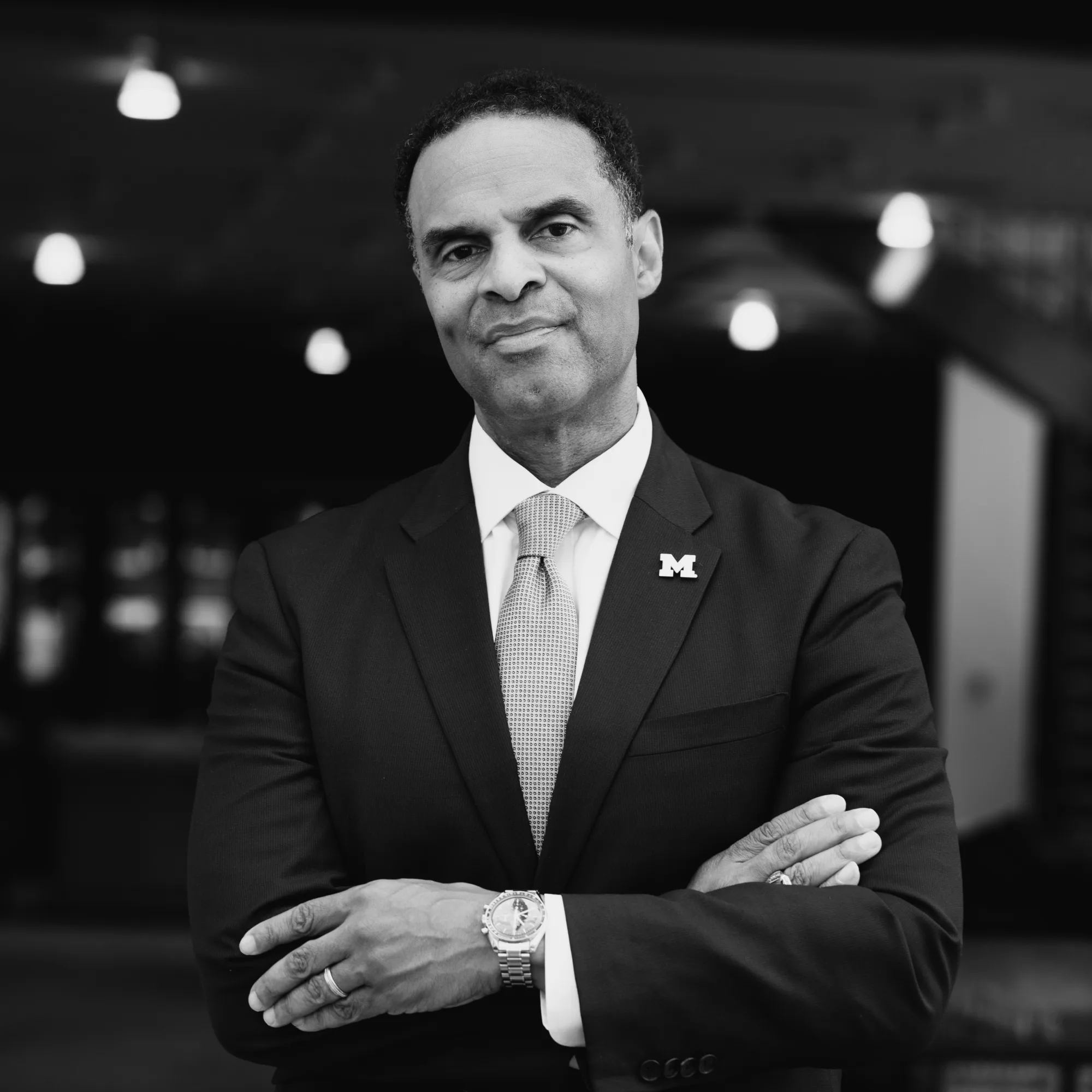

Dean of Engineering, University of Michigan

Since ChatGPT’s launch in November 2022, we’ve seen a proliferation of articles about AI agents across the media and academic landscapes. There are studies of their effects on productivity, commentaries on how higher education is grappling with their capabilities, and calls to establish shared safety protocols and governance. Of course, books and movies about AI go back decades. Is there anything left to say?

As a fan of the film 2001: A Space Odyssey since I was four, and an even bigger admirer of Arthur C. Clarke’s novel, I have long been fascinated by space travel and the applications of artificial intelligence. Clarke’s story focuses on science and technological advancement, yes, but also on the mysticism that sparks evolution. It explores what prompts a sentient being to innovate and evolve, and it evokes the sense of wonder that accompanies exploration. These deep questions drew me to the field of space technology (advanced plasma propulsion, to be specific) and made me the person I am. One could say I’m biased toward AI.

When I think about ChatGPT and its successors, I see similarly deep conversations to be had about how society can best guide the technology to maximize its benefits for humanity. While I recognize the potential for good, I also understand the concerns.

With that in mind, and for a bit of fun, I asked the latest model, GPT-4, what it “thought” about the following topic: From the perspective of a space technologist, dean of a prominent engineering college, and lifelong science fiction enthusiast who read 2001: A Space Odyssey many times, what will engineering education look like in 2030 and 2050, and how will the role of engineers in society evolve as they create technology alongside powerful AI agents?

While short on specifics, its reply was remarkable. It echoed many tenets of my own College’s approach, which prioritizes engineering excellence, interdisciplinary collaboration, and equity-centered values. It wrote:

“As we navigate the complex landscape of AI technologies, ethical engineering and responsible AI development will become increasingly important. Just as HAL faced ethical dilemmas in the story, future engineers will need to make responsible decisions that prioritize the well-being of humanity.”

It had less to offer about the evolution of engineering education, but at least acknowledged its shortcoming:

“It’s less certain how engineering education will further evolve as we approach 2050. One possibility is a stronger emphasis on human-AI collaboration skills…This collaboration, reminiscent of HAL’s cognitive abilities complementing the human astronauts’ expertise, could require a strong focus on lifelong learning and adaptability.”

The evolution of technology in education is nothing new. When I was studying engineering, I had programmable calculators, access to a mainframe computer, and later a personal computer. Writing computer programs let me do far more analysis than my parents could with slide rules. With better technology, I spent less time on rudimentary calculations and more on analyzing the results, which gave me deeper insight into the phenomena I was studying. I was able to think (and therefore learn) more because I had to “crunch numbers” less.


Will AI agents similarly elevate students’ thinking? Will they free students from time-consuming activities of moderate utility? Or are those activities crucial in forming analytical thinking skills, especially when educating future engineers? Essentially, will AI agents help educators guide learners up the ladder that starts at data and rises to information, then knowledge, then wisdom, and ultimately insight?

I see tremendous potential, especially if we educators evolve our instructional approaches. AI agents could help students hone the skills of asking intelligent questions, evaluating output, and checking for accuracy and bias. Through those pursuits, one learns to become a more critical thinker.

AI may also level the playing field for students by letting their contributions be driven by their ideas rather than by test scores, AP credits, or language fluency. But it could also widen gaps. Today, those who stand to gain the most are those already privileged with the ability, time, and awareness to use the technology.

In 2001: A Space Odyssey, HAL becomes so self-aware that humans lose control of it. GPT-4 offered advice on a better path forward.

“The parallels between HAL’s journey in the Space Odyssey and our own path serve as both a cautionary tale and a source of inspiration….Technology will continue to advance, and it is our duty to ensure that it augments human values rather than working against them. The key lies in striking a balance between embracing the potential of AI and safeguarding the well-being of humanity.”

Furthermore, the AI suggests, engineers have an opportunity to be a positive influence.

“Additionally, engineers may play a crucial role in educating and empowering the public about AI’s capabilities, limitations, and ethical implications, serving as advocates for responsible AI and sharing their knowledge and expertise to foster a society that can harness the benefits of AI while mitigating its risks.”

I take issue with one word in that response: “may.” Engineers “must” play a crucial role in ensuring that AI contributes to human flourishing. We need to lean into our curiosity and hope for the future, and let our ethics and values drive us as we map our next odyssey.

The views, opinions, and proposals expressed in this essay are those of the author and do not necessarily reflect the official policy or position of any other entity or organization, including Microsoft and OpenAI. The author is solely responsible for the accuracy and originality of the information and arguments presented in their essay. The author’s participation in the AI Anthology was voluntary, and no incentives or compensation were provided.

Gallimore, A. D. (2023, May 30). Advanced AI: Our Next Odyssey? In: Eric Horvitz (ed.), AI Anthology. https://unlocked.microsoft.com/ai-anthology/alec-gallimore

Alec D. Gallimore

Alec D. Gallimore, Ph.D., is the Robert J. Vlasic Dean of Engineering, the Richard F. and Eleanor A. Towner Professor of Engineering, and an Arthur F. Thurnau Professor in the Department of Aerospace Engineering at the University of Michigan. He will assume the role of provost and chief academic officer for Duke University on July 1, 2023.